AI Can Code (But It Doesn’t Care About Quality)

Every engineer now has a code-producing machine in their hands, but volume rarely equates to quality. How do individual contributors and tech leads maintain standards when it’s suddenly much easier to create than it is to edit?

Every engineer now has a code-producing machine in their hot hands, and we’re fast approaching the moment when using AI tools as an engineer is something you can no longer realistically avoid. Even if you somehow manage to avoid using them yourself, you will still need to adapt to the fact that most, if not all, of your colleagues are using them to contribute to the same codebase you are. The question is: what can individual contributors do to help maintain quality when it’s so much easier to create than to edit?

The deluge

When technology reduces the friction and cost of creation, volume explodes - but quantity rarely equates to quality.

AI is no different here. It's being used for pull requests across every conceivable codebase, both public and private, with varying degrees of care for the quality of the contributions it generates. AI tools can produce plausible output faster than humans can read it and judge its meaning and accuracy, and they're much better at producing than at reducing.

As a tech lead, part of my job is to be a steward of quality in the section of the codebase I oversee. With human contributors, there is always some wiggle room on quality – perfect is the enemy of the good, and if we spent hours agonising over every change in every bugfix and feature, we would never release anything. But with human contributors, we can be aware of these tradeoffs, share trust and understanding with one another, and know that this tech debt may come back around for payment at some point in the future.

AI contributors have none of this. They have no memory, no trust or understanding, and no knowledge that what they are doing now may cause some poor sod untold hours of hair-pulling and frustration somewhere down the line. AI tools live in a temporary existence, without the history and learned lessons that a human builds up over the years, and they face zero consequences if they get something wrong. Quality does not come built in with AI tools. They need careful guidance and curation from a human pilot, plus guardrails such as CLAUDE.md files, to ensure a project’s standards are upheld.

If used carelessly, AI contributions can be a huge drain on reviewers’ time and energy. For the reasons above, AI code often takes longer to review and is less dependable than human-written code, which leads to a lack of trust. Reviewers can get into a mindset of “If no-one cared enough to write this themselves, why should I care enough to read it?” Over time, careless use of AI can erode trust and relationships between colleagues and drain overall productivity.

A shared responsibility

Code review and software quality are a give and take, a shared responsibility between the contributor and the reviewer. Now, more than ever, we must remember the human factor in software engineering. The contributor’s responsibilities have not changed:

- Code must follow agreed-upon styles and conventions and be adequately tested

- The contributor understands and stands by their pull request, accepting to the best of their knowledge that the change is correct and that relevant issues and tradeoffs have been adequately considered

- The contributor has given the reviewer, their colleagues, and their future selves adequate context for the change

- The contributor approaches the review with an open mind, expecting potentially extensive feedback and rework, especially when AI is involved

- The contributor understands the complex tradeoff between short-term gain and long-term tech debt when it comes to making any changes in the codebase

Nor have the reviewer’s responsibilities:

- The reviewer should use the review either to provide guidance on an area of the codebase they already know, or to learn about an area that is new to them

- The reviewer should strive to uphold agreed-upon quality standards, styles and conventions, and testing standards

- The reviewer knows that their role isn’t to be the arbiter of perfect code. As long as there has been appropriate communication with the contributor, it is sometimes more prudent to merge a pull request with outstanding issues now and come back to them later

How does this relate to AI?

Simply put, nobody is absolved of their responsibilities just because AI may have written some, or even all, of the PR. The contributor cannot lob a PR over the fence and wash their hands of it, and the reviewer cannot rubber-stamp or avoid a PR just because they don’t want to deal with AI code. In the long term, a lack of care is poison for any software project.

A daily reality check

Part of using AI tools is knowing when to stop using them. Often, AI will get stuck on a train of thought (in this way these tools are much like humans) and keep going down a path that is simply wrong. Sometimes this is because of context poisoning, other times because the tool cannot fit enough of the codebase into its context, and other times still because the problem is too complex or the prompt too thin to arrive at a quality result.

Coding is thinking. Stumbling through a first draft, in the same way you would when writing an essay, gives you important insights into the problem you are trying to solve that reading the output of an AI tool would not. Working through the problem manually is often more time-efficient than a long back-and-forth with AI tools.

Doing it yourself, taking breaks, and sleeping on it can be much more effective, and lead to a quality result that you can stand behind with confidence at review time. The reviewer will appreciate the extra thought put into the solution.

In general terms, AI is better suited to fixing bugs, writing scripts, and acting as a rubber duck or copilot than it is to writing completely novel creative solutions. Humans are creative beings; it is one of our most precious gifts, along with the ability to cooperate and to craft tools that have helped us dominate the world (including tools that can almost appear to think for themselves, and tools that make other tools).

Code is for humans first

While code is ultimately intended for machine consumption, humans are the ones who write it, read it, and are responsible for it, and I don’t see this changing anytime soon. While using AI tooling, we should still optimise for human understanding and thought. If we don’t, we will quickly end up mired in codebases that no-one understands, because no-one has been truly involved in writing or reviewing the code and it has all been left up to AI.

Then, when something inevitably goes wrong or tech debt becomes unbearable, everyone has to build up context from zero, whereas today there are likely at least one or two people, if not more, who have some familiarity. This is a bad place to be, and not conducive to quality. Once the foundations are eaten away by AI termites over time, the whole structure will begin to collapse.

Always try to ask yourself this question: “Would I want to be the person who has to deal with this AI code down the line?” If the answer is no, then there is still some tailoring to be done for the humans involved. We must put ourselves, both present and future, before the machines if we are to maintain code quality and work sustainably in the age of AI.

Trust, but verify

With all this in mind, there are ways engineers can work sustainably, with respect for one another and for the quality of the work, while using AI tools.

When using these tools, or reviewing the work of those who use them, your mantra should always be the Russian proverb famously quoted by Ronald Reagan: “Trust, but verify.”

When reviewing PRs, programmers conduct “sniff” tests on a daily basis. There are a few ways a PR can fail a sniff test for AI usage:

- Extremely long-winded PR description with a lot of emojis, em dashes, and bullet points

- Excessive use of comments in code, especially in test files

- Wonky code style that does not fit in with other examples in the codebase and common patterns

- Code that looks like it is in a “first draft” state, where it looks as though it was dropped into place without a second look or a refactor

- Signs that the code may not fit in with the “vibe” of the rest of the codebase, that whatever wrote it may not be aware of other parts of the code

- Code that is reinventing the wheel, for example making a new JavaScript modal window pattern when there is already a modal window component in the project

If you notice any of these signs…verify.

Ask the contributor whether they used AI here, and whether they understand what the code is doing. They shouldn’t be offended; this is merely the reality we all now live in. Provide guidance and hold the PR to the same standard as you would for any human-generated code, and do not accept “IDK it’s AI-written 🤷” as a get-out-of-jail-free card.
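As a rough illustration, the more mechanical signs from the list above (a long-winded description, heavy use of emojis, em dashes, and bullet points, and comment-heavy code) can be pre-screened with a few lines of Python. This is a minimal sketch, not an AI detector: the function names and every threshold are hypothetical and would need tuning against your own project’s history, and the comment check only understands whole-line comments.

```python
import re

# Hypothetical thresholds, chosen for illustration only.
MAX_WORDS = 400
MAX_EMOJI = 3
MAX_EM_DASHES = 3
MAX_BULLETS = 15

# Rough emoji match: the main pictograph blocks plus misc symbols and dingbats.
EMOJI_RE = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")
# A bullet is a line that starts with -, * or the bullet character.
BULLET_RE = re.compile(r"^[ \t]*[-*\u2022][ \t]+", re.MULTILINE)


def sniff_description(description: str) -> list[str]:
    """Return the reasons a PR description deserves a closer look."""
    flags = []
    if len(description.split()) > MAX_WORDS:
        flags.append("long description")
    if len(EMOJI_RE.findall(description)) > MAX_EMOJI:
        flags.append("many emojis")
    if description.count("\u2014") > MAX_EM_DASHES:  # U+2014 is the em dash
        flags.append("many em dashes")
    if len(BULLET_RE.findall(description)) > MAX_BULLETS:
        flags.append("many bullet points")
    return flags


def comment_ratio(source: str, marker: str = "#") -> float:
    """Fraction of non-blank lines that are whole-line comments."""
    lines = [line.strip() for line in source.splitlines() if line.strip()]
    if not lines:
        return 0.0
    return sum(line.startswith(marker) for line in lines) / len(lines)


print(sniff_description("Fix off-by-one in pagination."))  # prints []
```

An empty list does not mean a PR is fine, and a non-empty one does not mean it is bad. The flags are only a prompt to slow down and have the conversation described above; the judgement calls in the list, such as spotting a reinvented wheel, still need a human who knows the codebase.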

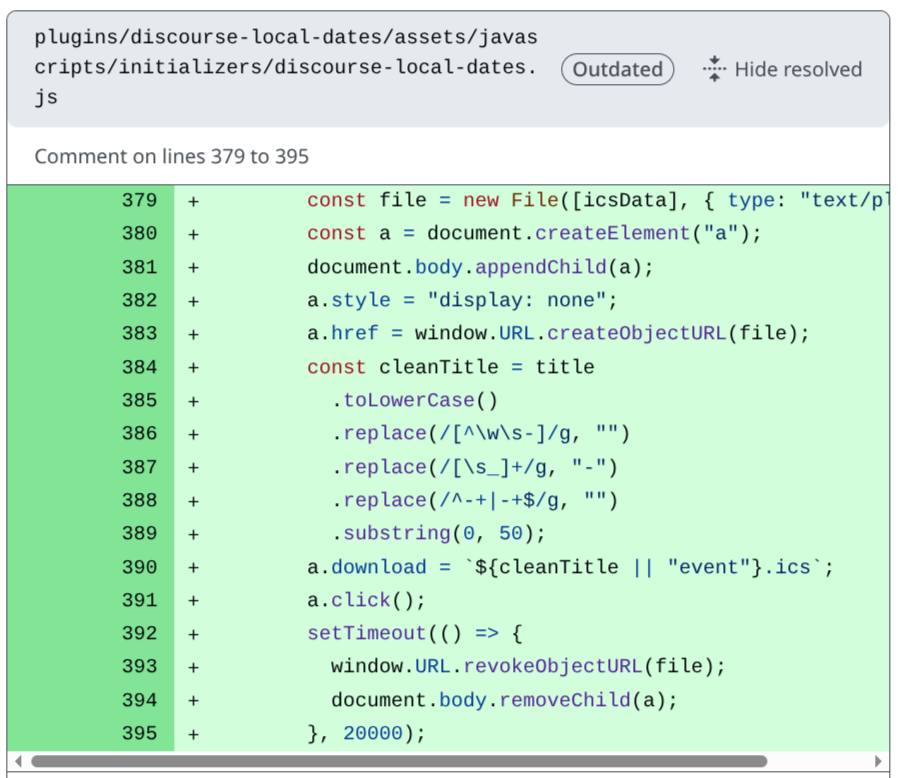

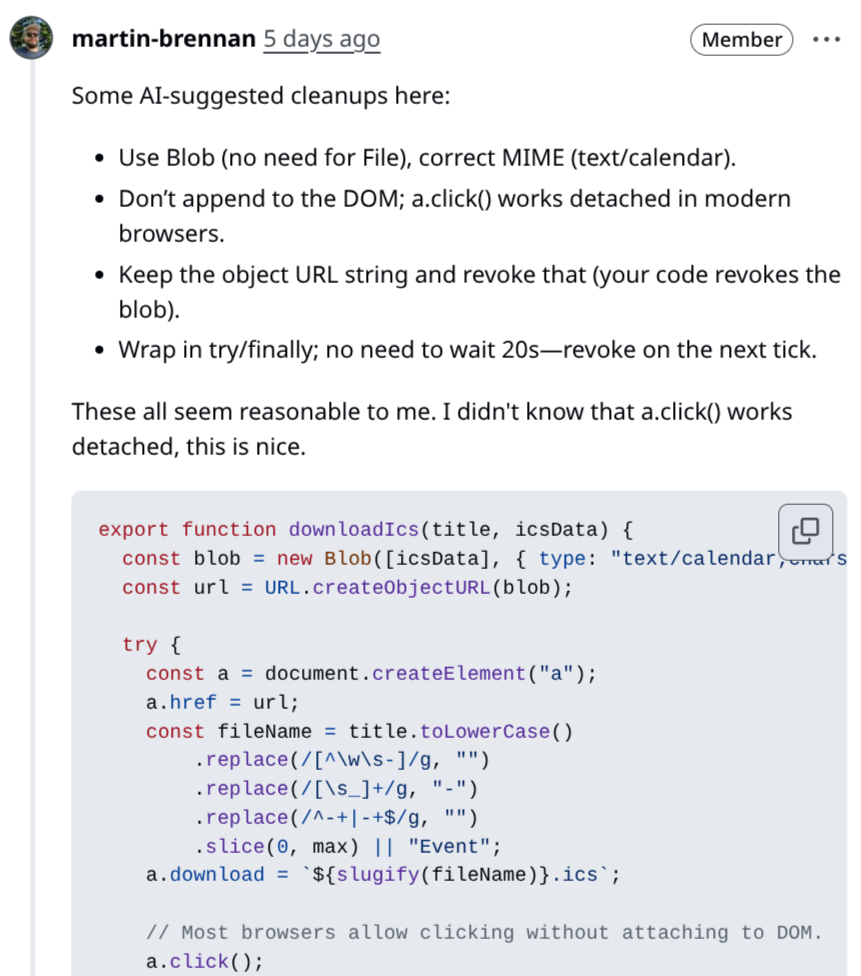

You can even use AI tools to verify other AI output. Here is a practical example from a review I did recently. On the left I saw what looked like AI code, and from experience I thought there could be several improvements, but I didn’t want to spend too much time hunting them down individually. So I fed it to ChatGPT, and asked it if there was a cleaner or more idiomatic way to do this, and incorporated the suggestions into my review after verifying they were correct:

Note that what I did not do is send the contributor a link to a ChatGPT conversation, or a screenshot of a massive wall of AI text. I used AI to help increase the quality of the contribution, and I did not waste my time or the contributor’s. AI helped, but it did not dominate.

What’s next?

AI-generated contributions will accelerate as more software engineers and designers adopt the technology and as tooling gets better and more integrated with everyday workflows. We are unlikely to reach some AGI summit anytime soon where all software is written entirely by AI, but we should assume that in any given changeset, the amount of AI used falls somewhere on the scale from “not used at all” to “used for every line of code and text”.

To maintain quality, we need to keep this front of mind, and work together as contributors, reviewers, and maintainers, never forgetting the human factor. AI can be a boon rather than a curse for software projects, but it’s up to us to make the difference.