Our Discourse Hosting Configuration

We've talked about the Discourse server hardware before. But now let's talk about the Discourse network. In the physical and software sense, not the social sense, mmmk? How exactly do we host Discourse on our servers?

Here at Discourse, we prefer to host on our own super-fast, hand-built, colocated physical hardware. The cloud is great for certain things, but like Stack Exchange, we made the decision to run on our own hardware. That turned out to be a good decision, as Ruby didn't virtualize well in our testing.

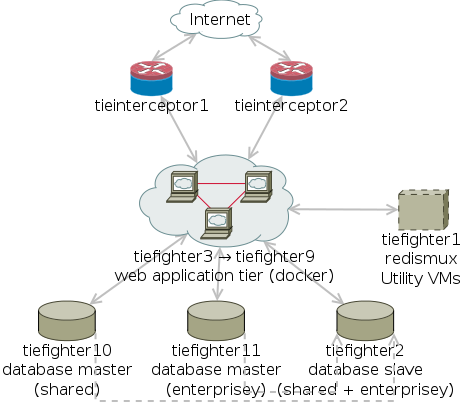

The Big Picture

(Yes, that's made with Dia – still the quickest thing around for network diagramming, even if the provided template images are from the last century.)

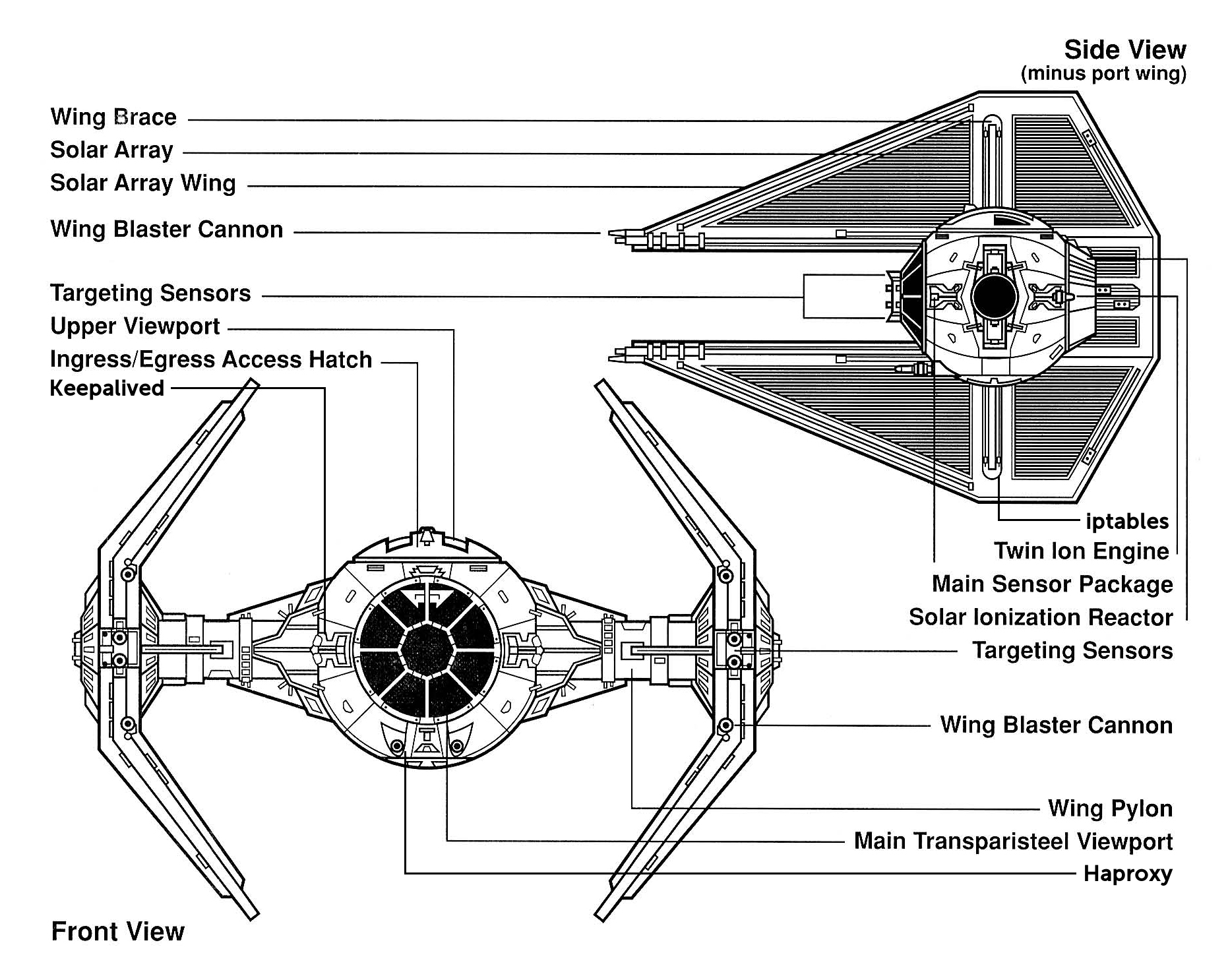

Tie Interceptors

Nothing but the finest bit of Imperial technology sits out front of everything, ready to withstand an assault from the Internet at large on our application:

The tieinterceptor servers handle several important functions:

- ingress/egress: artisanally handcrafted iptables firewall rules control all incoming traffic and ensure that certain traffic can't leave the network

- mail gateway: the interceptors are responsible for helping to ensure that mail reaches its destination. That means DKIM signing, (potentially) hashcash signing, and header cleanup

- haproxy: using haproxy allows the interceptors to act as load balancers, dispatching requests to the web tier and massaging the responses

- keepalived: we use keepalived for its VRRP implementation. We give keepalived rules such as "if haproxy isn't running, this node shouldn't have priority" and it performs actions based on those rules - in this case adding or removing a shared IPv4 (and IPv6) address from the Internet-facing network
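To make the keepalived rule above a bit more concrete, here is a minimal Python sketch of the idea, not our actual keepalived configuration. The node names, the priority numbers and the penalty are all made up for illustration, and the name-based tie-break is a simplification of what VRRP really does.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str               # hypothetical host name
    base_priority: int      # hypothetical static priority
    haproxy_running: bool   # outcome of a health check on this node

    def effective_priority(self, penalty: int = 100) -> int:
        # Models a rule like "if haproxy isn't running,
        # this node shouldn't have priority".
        if self.haproxy_running:
            return self.base_priority
        return self.base_priority - penalty


def elect_master(nodes):
    # The node with the highest effective priority holds the shared address.
    # Ties are broken by name here purely to keep the sketch deterministic.
    return max(nodes, key=lambda n: (n.effective_priority(), n.name))


if __name__ == "__main__":
    a = Node("interceptor-a", 150, haproxy_running=True)
    b = Node("interceptor-b", 100, haproxy_running=True)
    print(elect_master([a, b]).name)
    a.haproxy_running = False   # haproxy dies on the preferred node
    print(elect_master([a, b]).name)
```

When the health check on the preferred node fails, its effective priority drops below its peer's, and the shared address moves to the other node.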

Tie Fighters (web)

The tiefighters represent the mass fleet of our Death Star, our Docker servers. They are small, fast, identical – and there are lots of them.

They run the Discourse server application in stateless docker containers, allowing us to easily set up continuous deployment of Discourse with Jenkins.

What's running in each Docker container?

- Nginx: Wouldn't be web without a webserver, right? We chose Nginx because it is one of the fastest lightweight web servers.

- Unicorn: We use Unicorn to run the Ruby processes that serve Discourse. More Unicorns = more concurrent requests.

- Anacron: Server scheduling is handled by Anacron, which keeps track of scheduled commands and scripts, even if the container is rebooted or offline.

- Logrotate and syslogd: Logs, logs, logs. Every container generates a slew of logs via syslogd and we use Logrotate to handle log rotation and maximum log sizes.

- Sidekiq: For background server tasks at the Ruby code level we use Sidekiq.

Shared data files are persisted on a common GlusterFS filesystem shared between the hosts in the web tier using a 3-Distribute 2-Replicate setup. Gluster has performed pretty well, but doesn't seem to tolerate change that well - replacing/rebuilding a node is a bit of a gut-wrenching operation that kind of feels like yanking a disk out of a RAID10 array, plugging a new one in and hoping the replication goes well. I want to look at Ceph as a distributed filesystem store - able to provide both an S3-like interface and a multimount POSIX filesystem.
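For anyone unfamiliar with the layout, a 3-Distribute 2-Replicate volume is six bricks grouped into three mirrored pairs: each file lands on exactly one pair and is written to both bricks in it. The Python sketch below is a simplified model of that placement only. The brick names are hypothetical, and the plain hash-modulo step stands in for Gluster's own hashing scheme, which works differently.

```python
import hashlib

# Hypothetical brick names; six bricks = 3 distribute subvolumes x 2 replicas.
BRICKS = [
    "host1:/brick", "host2:/brick",
    "host3:/brick", "host4:/brick",
    "host5:/brick", "host6:/brick",
]
REPLICA = 2


def replica_sets(bricks, replica):
    # Consecutive bricks are grouped into mirrored sets of size `replica`.
    return [bricks[i:i + replica] for i in range(0, len(bricks), replica)]


def bricks_for(path, bricks=BRICKS, replica=REPLICA):
    # Pick one replica set from a hash of the path (simplified stand-in),
    # then return every brick in that set: the file is stored on all of them.
    sets = replica_sets(bricks, replica)
    digest = hashlib.sha256(path.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(sets)
    return sets[index]


if __name__ == "__main__":
    for name in ["uploads/avatar.png", "uploads/logo.png", "backups/site.tar.gz"]:
        print(name, "->", bricks_for(name))
```

Losing one brick leaves every file with one surviving copy, which is why rebuilding a node feels so much like replacing a disk in a RAID10 array.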

Tie Fighters (database)

We are using three of the Ties with newer SSDs as our Postgres database servers:

- One hosts the databases for our business class (single Discourse application image hosting many sites) containers and standard-tier plans.

- One hosts the databases for the enterprise class instances.

- One is the standby for both of these - it takes the streaming replication logs from the primary DBMSes and is ready to be promoted in the event of a serious failure.

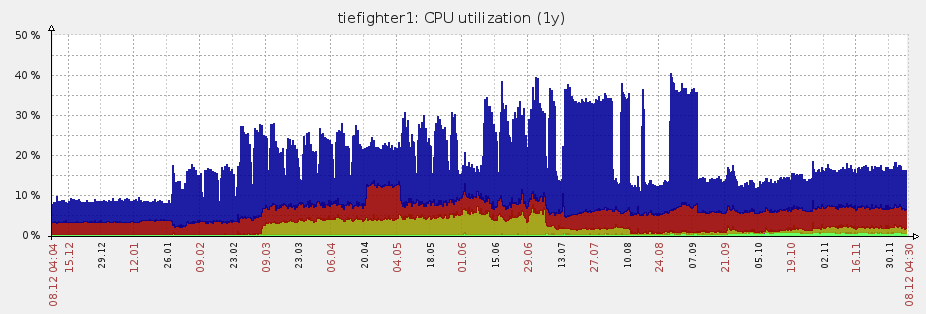

Tie Fighter Prime

The monster of the group is tiefighter1. Unlike all the other Ties, it is provisioned with 8 processors and 128 GB memory. We've been trying to give it more and more to do over the past year. I'd say that's been a success:

Although it is something of a utility and VM server, one of the most important jobs it handles is our redis in-memory network cache.

Properly separating redis instances from each other has been on our radar for a while - they were already configured to use separate databases for partitioning, but instances sharing a server could still affect each other. This was most notable in the multisite configurations, which connected to the same redis server but used different redis databases.

We had an inspiration: use the password functionality provided by the redis server to automatically drop any connections using the same password into their own isolated redis backend. A new password will automatically create a new instance specifically tied to that password. Separation, security, ease of use. A few days later, Sam came back with redismux. It's been chugging along after being moved inside Docker in September.
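The password-as-routing-key idea can be shown in a few lines of Python. This is only an illustration of the concept, not how redismux itself is implemented: the `PasswordMux` class is hypothetical, and plain dictionaries stand in for the isolated redis backends.

```python
class PasswordMux:
    """Routes each password to its own isolated key/value store."""

    def __init__(self):
        self._backends = {}

    def backend_for(self, password):
        # An unseen password creates a fresh backend tied to that password;
        # a known password always maps back to the same backend.
        if password not in self._backends:
            self._backends[password] = {}
        return self._backends[password]


if __name__ == "__main__":
    mux = PasswordMux()
    site_a = mux.backend_for("site-a-secret")   # hypothetical passwords
    site_b = mux.backend_for("site-b-secret")
    site_a["greeting"] = "hello"
    print("greeting" in site_b)
    print(mux.backend_for("site-a-secret") is site_a)
```

Two clients that present the same password share a backend, while clients with different passwords can never see each other's keys.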

Jenkins

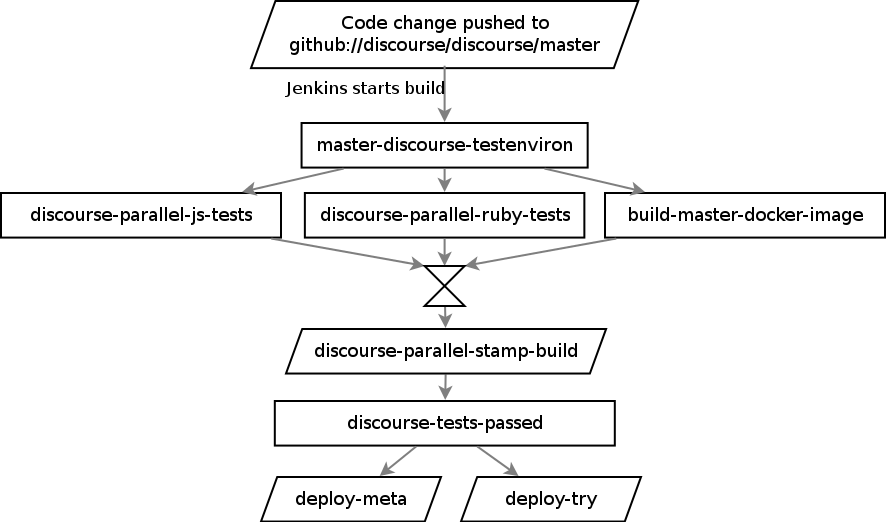

Jenkins is responsible for "all" of our internal management routines - why would you do tasks over and over when you can automate them? Of course, the elephant in the room is our build and deployment process. We have a series of jobs set up that automatically run on a GitHub update.

Total duration from a push to the main GitHub repository to the code running in production: 12 minutes, 8 of which are spent building the new master Docker image.

It's taken us about a year (and many, many betas of Docker and Discourse) to reach this as a reasonably stable configuration for hosting Discourse. We're sure it will change over time, and we will continue to scale it out as we grow.