The Discourse Servers

When we moved to our new datacenter, I didn't elaborate on exactly what sort of hardware we have in the rack. But now I will.

There are 13 servers here now, all variously numbered Tie Fighters -- derived from our internal code name for the project while it was a secret throughout 2012.

Tie Fighter 1

This is a very beefy server that we bought first with the idea that we'd do a lot of virtualization on one large, expensive, many-core server.

- Intel Xeon E5-2680 2.7 GHz / 3.5 GHz 8-core turbo

- 128 GB DDR3 1333MHz ECC Reg (8 x 16GB)

- 8 × Samsung 850 Pro 1TB SSD

- LSI 3Ware 9750 8i SAS RAID Controller

Specs:

- 8 x 2.5" hot-swap drive bays

- Dual gigabit ethernet (both connected as peers)

- Integrated IPMI 2.0 ethernet

- 330W gold efficiency power supply

- SuperMicro X9SRE-3F mobo

- SuperMicro 1017R-MTF case

We didn't build this one, but purchased it from PogoLinux where it is known as the Iris 1168. We swapped out the HDDs for SSDs in early 2016.

Tie Fighter 2 - 10

Turns out that Ruby is … kind of hard to virtualize effectively, so we ended up abandoning that big iron strategy and going with lots of cheaper, smaller, faster boxes running on bare metal. Hence these:

- Intel Xeon E3-1280 V2 Ivy Bridge 3.6 Ghz / 4.0 Ghz quad-core turbo

- 32 GB DDR3 1600MHz ECC Reg (4 x 8 GB)

- 2 × Samsung 512 GB SSD in software mirror (830 on 2-5, 840 Pro on 6-11)

Specs:

- 4 x 2.5" hot-swap drive bays

- Dual gigabit ethernet (both connected as peers)

- Integrated IPMI 2.0 ethernet

- 330W gold efficiency power supply

- SuperMicro X9SCM-F-O mobo

- SuperMicro CSE-111TQ-563CB case

I built these, which I documented in Building Servers for Fun and Prof... OK, Maybe Just for Fun. It's not difficult, but it is a good idea to amortize the build effort across several servers so you get a decent return for your hours invested.

Tie Bomber

Our one concession to 'big iron', this is a NetApp FAS2240A storage device. It has:

- two complete, redundant devices with high speed crossover rear connections

- dual power supplies per device

- 5 ethernet connections (1 management, 4 data) per device

- 12 7.2K RPM 2TB drives per device

It's extremely redundant and handles all our essential customer files for the sites we host.

What about redundancy?

We have good live redundancy with Tie 1 - 11; losing Tie 3 or 4 wouldn't even be noticed from the outside. The most common failure points for servers are hard drives and PSUs, so just in case, we also keep the following "cold spare" parts on hand, sitting on a shelf at the bottom of the rack:

- 2 × X306A-R5 2TB drives for Tie Bomber

- 4 × Samsung 512 GB SSD spares

- 2 × Samsung 1 TB SSD spares

- 2 × SuperMicro 330W spare PSU for Tie 1 - 11

It's OK for the routing servers to be different, since they are almost fixed function devices, but if I could go back and do it over again, I'd spend the money we paid for Tie 1 on three more servers like Tie 2 - 11 instead. The performance would be better, and the greater consistency between servers would simplify things.

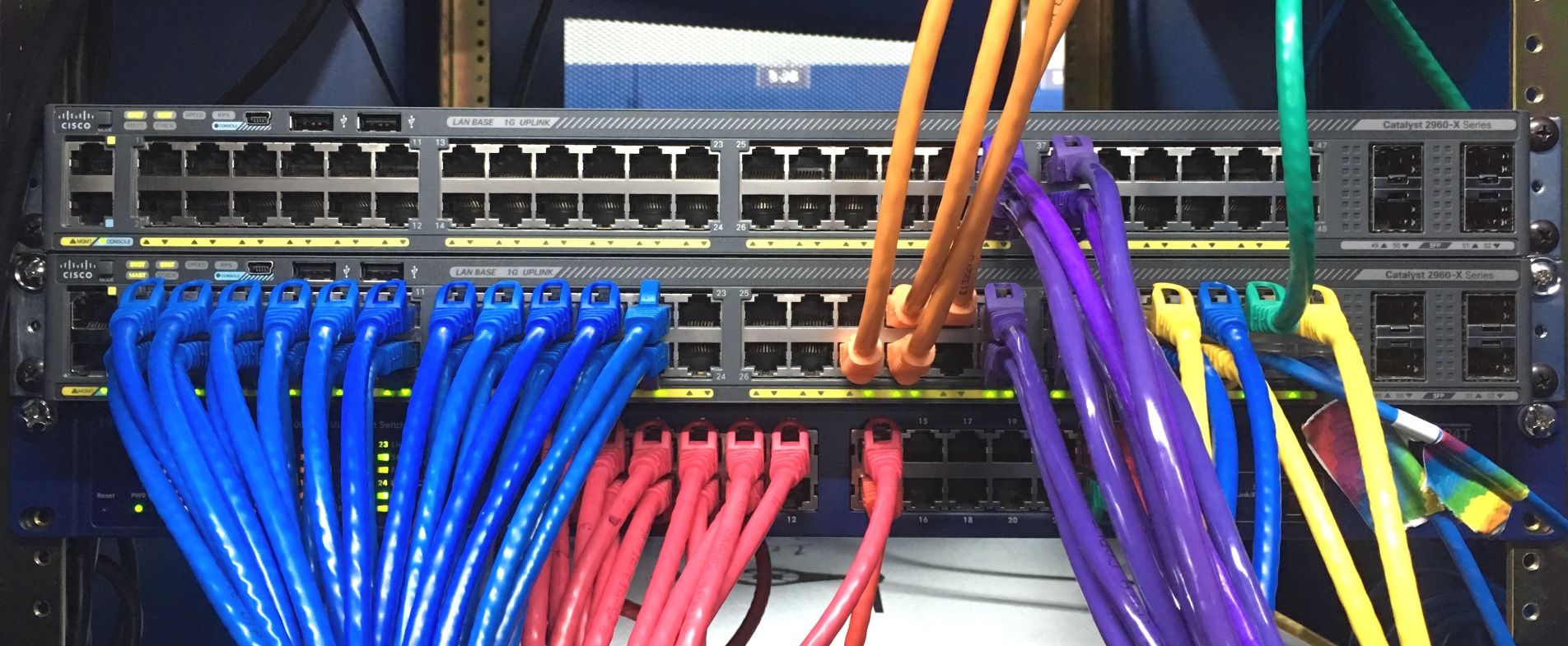

Networking

Cables are color-coded:

█ IPMI VPN, █ private local intra-server network, █ incoming Internet, █ switch cross connect, █ cabinet cross connect, █ NetApp file storage device

- The primary networking duties are handled by a rack mount Cisco Catalyst 2960X 48 port switch.

- We have a second stacked Catalyst 2960X live and ready to accept connections. (We're slowly moving half of each bonded server connection to the other switch for additional redundancy)

- There's also a NetGear ProSafe 24-port switch dedicated to IPMI routing duties to each server.

We use four Tie Shuttle boxes as inexpensive, solid state Linux OpenVPN access boxes to all the IPMI KVM-over-Internet dedicated ethernet management ports on each server. IPMI 2.0 really works!

But… is it webscale?

During our launch peak loads, the servers were barely awake. We are ridiculously overprovisioned at the moment, and I do mean ridiculously. But that's OK, because hardware is cheap, and programmers are expensive. We have plenty of room to scale for our partners and the eventual hosting service we plan to offer. If we do need to add more Tie Fighters, we still have a ton of space in the full rack, and ample power available at 15 amps. These servers are almost exclusively Ivy Bridge CPUs, so all quite efficient -- the whole rack uses around 6-10 amps in typical daily work.

As for software, we're still hashing out the exact configuration details, but we'll be sure to post a future blog entry about that too. I can tell you that all our servers run Ubuntu Server 14.04 LTS x64, and it's working great for us!