Faster (and smaller) uploads in Discourse with Rust, WebAssembly and MozJPEG

As of a few weeks ago, image uploads in Discourse are faster AND smaller thanks to a technique that compresses and optimizes images client-side before they are uploaded. This blog post describes how the feature works and how we implemented it in Discourse.

Introduction

While Discourse, and forums in general, are mainly about ¶ paragraphs of text, the discourse on the internet is increasingly composed of media. Pictures have become a major part of user posts. It's also worth mentioning that in the 8 years since we started Discourse, smartphone cameras have become ubiquitous and can take great pictures in an instant.

Because of all that, very early into the project we received feature requests about optimizing images before uploading. These requests come from staff who want to make it easy for their users to share pictures while being mindful of the time it takes to upload files over spotty mobile connections.

One of the leading experiments in client-side image compression is Squoosh.app by the cool people at Google Chrome Labs. It was first announced in this talk, and it was definitive proof that it was possible to get quality image compression right on the client side by leveraging new browser features like WebAssembly.

Our new image optimization pipeline

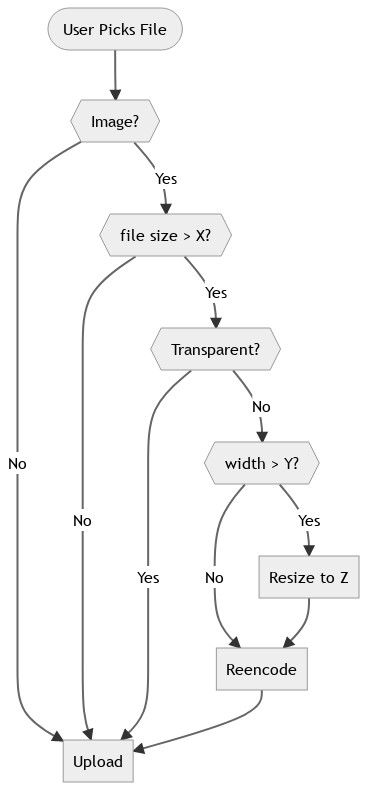

When you click on the upload button while composing a post in Discourse, we open the operating system's file picker and you can select one or more files to add to your post. If an image is detected, the new feature kicks in and performs the optimization just-in-time before uploading the file to the server.

Here is a 10,000-foot view of the new flow:

It can be broken down into 3 main steps:

- Decoding

- Resizing

- Encoding

Image Decoding

After we determine that we have a valid image, the next step is converting this File object into a simple array of Red, Green, Blue, and Alpha bytes. That starts with decoding the File, be it a JPEG or a PNG, and luckily for us, most modern browsers have an incredibly straightforward method that does exactly this: self.createImageBitmap(). It's an awesome browser API that even does the hard work off the main thread, meaning we won't have UI slowdowns when decoding larger images on common devices. This method is not available in Safari, so there we must decode the File using an img element with the decoding="async" attribute.
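To make the "simple array of Red, Green, Blue, and Alpha bytes" concrete, here is a minimal sketch of how such a buffer is laid out. It assumes the row-major, 4-bytes-per-pixel layout that canvas image data uses; the helper names `rgbaByteLength` and `pixelAt` are hypothetical and are not part of Discourse.

```typescript
// Illustrative only: layout of a decoded image as a flat RGBA byte array.
// Helper names are hypothetical, not Discourse code.

interface Rgba {
  r: number;
  g: number;
  b: number;
  a: number;
}

// Bytes needed for a decoded image: 4 bytes (R, G, B, A) per pixel.
function rgbaByteLength(width: number, height: number): number {
  return width * height * 4;
}

// Read pixel (x, y) from a row-major RGBA buffer.
function pixelAt(
  data: Uint8ClampedArray,
  width: number,
  x: number,
  y: number
): Rgba {
  const i = (y * width + x) * 4;
  return { r: data[i], g: data[i + 1], b: data[i + 2], a: data[i + 3] };
}

// A 2x1 image: an opaque red pixel followed by a half-transparent blue pixel.
const tiny = new Uint8ClampedArray([255, 0, 0, 255, 0, 0, 255, 128]);
const second = pixelAt(tiny, 2, 1, 0);
console.log(second);
console.log(rgbaByteLength(4032, 3024));
```

A 4032x3024 photo decodes to roughly 48.8 million bytes in this layout, which gives a sense of why keeping the heavy work off the main thread matters.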

After we decode the image we need to draw the result into a Canvas so we can finally capture the resulting RGBA Array. We considered doing this by using an OffscreenCanvas in a worker, but that is not widely available. (During our internal tests, users also reported completely black images on iOS 15 Beta, i.e. the CanvasRenderingContext2D.getImageData would sometimes return an array where all values are 0. We have added some protections against this, but hopefully Apple will fix this before iOS 15's general release this fall.)
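One possible shape for a protection against the all-zero result is sketched below. This is an assumption about how such a check could look, not the actual Discourse implementation, and `isBlankRgba` is a hypothetical name. Note that a genuinely fully transparent black image would also be flagged, which in this sketch just means the original file would be uploaded untouched.

```typescript
// Hypothetical guard: true when every byte of the RGBA buffer is 0, which is
// what a failed getImageData() read was reported to look like.
function isBlankRgba(data: Uint8ClampedArray): boolean {
  for (let i = 0; i < data.length; i++) {
    if (data[i] !== 0) {
      return false; // found real pixel data, stop early
    }
  }
  return true;
}

const broken = new Uint8ClampedArray(16); // typed arrays start zero-filled
const healthy = new Uint8ClampedArray([0, 0, 0, 0, 12, 34, 56, 255]);

console.log(isBlankRgba(broken));
console.log(isBlankRgba(healthy));
```

When the check returns true, a caller could skip optimization and fall back to uploading the original file.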

(Also, in an early implementation of this feature, the decoding task was done using WebAssembly, but using the browser native APIs is much better, as we can support multiple image types, and the existing APIs are more than enough.)

Image Resize

Cameras in smartphones are constantly getting better, and this results in pictures with ever-growing dimensions. For example, a Galaxy S21 Ultra can take pictures that are 12000x9000 pixels in size. An image of that size is useful for lots of applications, but it's gigantic for a typical Discourse post body, where the available content width rarely exceeds 700 pixels. This led to our first strategy in the image optimization pipeline: resizing the image to a smaller size.

We expose two main configuration knobs to site admins here: the minimum width an image must have to trigger resizing, and the target width that such an image is resized to. Both settings default to 1920px, which means that we will resize pictures from most modern smartphones, as well as full-screen screenshots on desktops.
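The interaction between the two knobs can be sketched as a small function. The setting names `resizeThreshold` and `resizeTarget` are hypothetical, and the choices to preserve the aspect ratio, to treat the threshold as inclusive, and to never upscale are assumptions made for this illustration.

```typescript
// Sketch of the resize decision; names and edge-case behaviour are assumptions.
interface ResizeSettings {
  resizeThreshold: number; // minimum width (px) that triggers a resize
  resizeTarget: number; // width (px) the image is scaled down to
}

interface Size {
  width: number;
  height: number;
}

function targetSize(original: Size, settings: ResizeSettings): Size {
  const tooNarrow = original.width < settings.resizeThreshold;
  const wouldUpscale = original.width <= settings.resizeTarget;
  if (tooNarrow || wouldUpscale) {
    return original; // leave small images alone
  }
  // Scale the height by the same factor as the width to keep the aspect ratio.
  const height = Math.round(
    (original.height * settings.resizeTarget) / original.width
  );
  return { width: settings.resizeTarget, height };
}

const defaults: ResizeSettings = { resizeThreshold: 1920, resizeTarget: 1920 };

console.log(targetSize({ width: 4032, height: 3024 }, defaults));
console.log(targetSize({ width: 1280, height: 720 }, defaults));
```

With the defaults, a 4032x3024 photo comes out at 1920x1440, while a 1280x720 screenshot is left at its original dimensions.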

As for performing the actual resize operation, we decided to use the same library Squoosh is using:

This small Rust library implements fast resizing and allows us to downscale using the Lanczos3 method. On the Squoosh app, they packaged the resize using wasm-bindgen, to allow it to be run in a browser context. Unfortunately, they used the wasm-bindgen target of web, which generates a native ES module that can be imported into any modern browser. This threw a bit of a spanner in our plans because we will be running the resize operation in a background dedicated worker and Firefox doesn't support running workers as type: 'module' yet. To work around this situation we had to fork the Squoosh packaging of this library and change the wasm-bindgen target to no-modules. It results in an API that is a bit more cumbersome, but it greatly increases the compatibility.
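For readers unfamiliar with Lanczos3, the sketch below shows the textbook kernel and how it turns into per-pixel weights. It is written in TypeScript purely for illustration and is not the Rust library's code; the function names are hypothetical.

```typescript
// Normalised sinc: sin(pi * x) / (pi * x), with the limit value 1 at x = 0.
function sinc(x: number): number {
  if (x === 0) {
    return 1;
  }
  const px = Math.PI * x;
  return Math.sin(px) / px;
}

// Lanczos kernel with a = 3: non-zero only within 3 pixels of the sample point.
function lanczos3(x: number): number {
  const a = 3;
  if (Math.abs(x) >= a) {
    return 0;
  }
  return sinc(x) * sinc(x / a);
}

// Weights of the 6 source pixels around a fractional position, scaled so they
// sum to 1 and the overall brightness of the image is preserved.
function lanczos3Weights(center: number): number[] {
  const first = Math.floor(center) - 2;
  const raw: number[] = [];
  for (let i = first; i < first + 6; i++) {
    raw.push(lanczos3(i - center));
  }
  const sum = raw.reduce((acc, w) => acc + w, 0);
  return raw.map((w) => w / sum);
}

console.log(lanczos3Weights(0.5));
```

Each output pixel is a weighted sum of nearby source pixels using weights like these. When downscaling, implementations typically stretch the kernel by the scale factor so that more source pixels contribute to each output pixel; that detail is omitted here.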

Image Encoding

Way back in 2014, Mozilla announced a new project: MozJPEG. It's an improved encoder for the decades-old JPEG file format, and doesn't break compatibility with the existing decoders in browsers.

This library is also packaged as WebAssembly in the Squoosh app, here using Emscripten. And, here again, we had to fork the original packaging as it was using ES Modules and wasn't compatible with our default strict Content Security Policy (CSP).

For image encoding, forum admins have yet another knob: the encoder quality parameter. We follow the upstream default of 75, but it can be tweaked as needed:

The quality switch lets you trade off compressed file size against the quality of the reconstructed image: the higher the quality setting, the larger the JPEG file, and the closer the output image will be to the original input. Normally you want to use the lowest quality setting (smallest file) that decompresses into something visually indistinguishable from the original image. For this purpose, the quality setting should generally be between 50 and 95 (the default is 75) for photographic images. If you see defects at -quality 75, then go up 5 or 10 counts at a time until you are happy with the output image. (The optimal setting will vary from one image to another.)

Results

Image Samples

Here is a picture taken from my Pixel 3A XL phone, before and after the default optimization in a Discourse site:

The original file is 4032x3024 and weighs 3.7MB, while the compressed one is 1920x1440 and weighs 416KB.
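To put those numbers in perspective, here is the arithmetic as a short sketch. It assumes 1 MB = 1000 KB, which the post does not specify.

```typescript
// Figures quoted in the post; 1 MB is taken as 1000 KB here (an assumption).
const originalKb = 3.7 * 1000;
const optimizedKb = 416;
const sizeReduction = 1 - optimizedKb / originalKb; // fraction of bytes saved

const originalPixels = 4032 * 3024;
const optimizedPixels = 1920 * 1440;
const pixelRatio = optimizedPixels / originalPixels; // fraction of pixels kept

console.log(`${(sizeReduction * 100).toFixed(1)}% smaller file`);
console.log(`${(pixelRatio * 100).toFixed(1)}% of the original pixels`);
```

The file shrinks by roughly 89% while keeping about 23% of the pixels, so the resize alone does not account for the whole saving; the re-encode contributes as well.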

Aggregate Statistics

After enabling this feature on some Discourse instances, we were able to measure the resulting file sizes across different categories of sites.

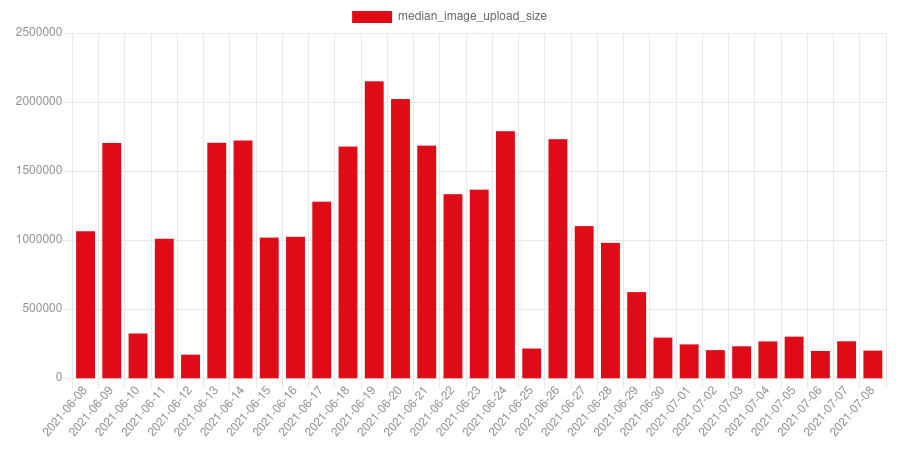

Here are the results in a yo-yo Discourse site, where the feature was enabled on 2021-06-29:

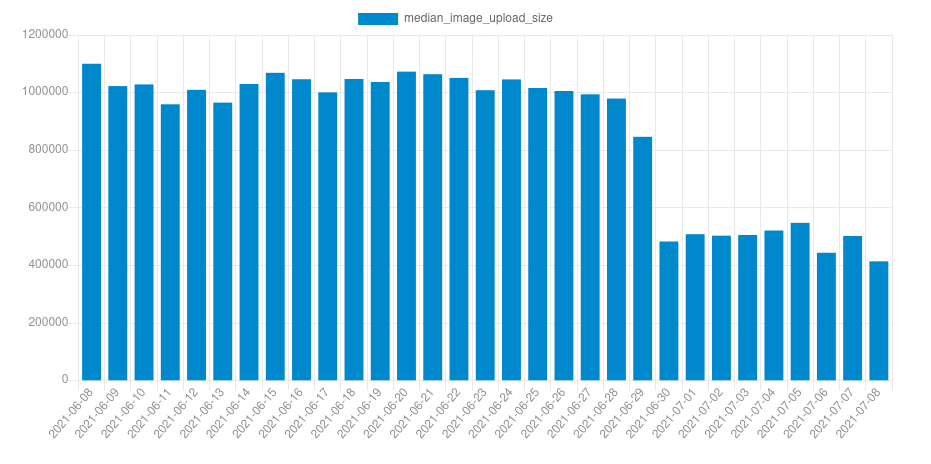

And here on a gardening enthusiast's site, where the feature was also enabled on 2021-06-29:

Extreme examples

Out of the box, Discourse limits user uploads to files under 4MB. This is in place for a number of reasons, one of which is that storage costs can be the main pain point for administrators in certain types of communities. With this new feature, users can directly upload files that are far larger than that, and they will automatically be resized to fit the existing limits, allowing seamless interaction for users without becoming onerous for small communities.

On a typical desktop, we have reports of JPEGs and PNGs larger than 50MB successfully being optimized and uploaded to Discourse.

Feel free to try out this feature at our test topic on Meta.

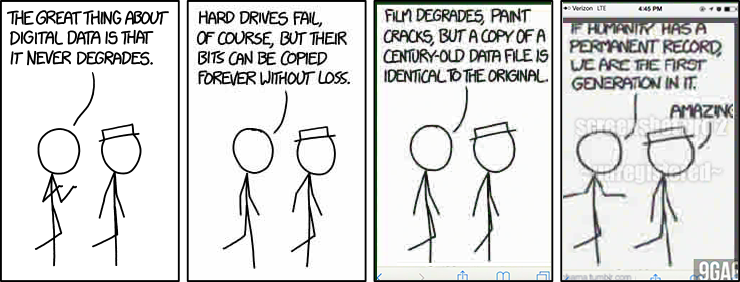

A note on data degradation

While this feature has plenty of upsides, we should not avoid talking about its main downside: data degradation.

We do have quite a few guards against overly aggressive data degradation for this feature:

- Minimum image size for triggering: we won't modify any image whose file size is below a configurable threshold

- Minimum image width for resizing: we won't downscale images whose width is below a configurable threshold

And, it's very important to remember that this feature can be completely disabled by site admins. If your community revolves deeply around high-quality image sharing, you can disable this whole client-side optimization feature with a single click.
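Taken together, the guards and the admin toggle amount to a small decision function. The sketch below is one way to express it; all names are hypothetical, the threshold values in the example are made up, and treating both thresholds as inclusive lower bounds is an assumption.

```typescript
// Hypothetical settings mirroring the guards described above.
interface OptimizationSettings {
  enabled: boolean; // admins can switch the whole feature off
  minFileSizeBytes: number; // smaller files are uploaded untouched
  resizeThresholdPx: number; // narrower images are never downscaled
}

interface Candidate {
  fileSizeBytes: number;
  width: number;
}

type Plan = { optimize: false } | { optimize: true; resize: boolean };

function planOptimization(
  image: Candidate,
  settings: OptimizationSettings
): Plan {
  if (!settings.enabled || image.fileSizeBytes < settings.minFileSizeBytes) {
    return { optimize: false };
  }
  // Re-encode the image, and downscale only when it is wide enough.
  return { optimize: true, resize: image.width >= settings.resizeThresholdPx };
}

// Example values only; these are not Discourse's actual defaults.
const exampleSettings: OptimizationSettings = {
  enabled: true,
  minFileSizeBytes: 200_000,
  resizeThresholdPx: 1920,
};

console.log(planOptimization({ fileSizeBytes: 3_700_000, width: 4032 }, exampleSettings));
console.log(planOptimization({ fileSizeBytes: 50_000, width: 800 }, exampleSettings));
```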

Wrapping up

Discourse, as a free and open-source project, couldn't exist without all the software that forms "our stack". With this feature we would like to thank all the software that went into building it: