Discourse MCP is here!

Model Context Protocol (MCP) is Anthropic’s open standard for connecting AI assistants to live data sources. As soon as Anthropic open-sourced it almost a year ago, we got excited about what a good match it was for Discourse.

After all, each Discourse community is a vast trove of data that is constantly updated by the main actors of whatever that community is about, be it a video game, a product, or a fan club. With MCP, Claude and other compatible assistants can search your forum, read topics, and use your community’s knowledge to answer questions, all in real time.

It didn't take long for our community to request an official MCP server for Discourse. After considering many ways to quickly deliver something useful to our very diverse user base, we are happy to introduce the Discourse MCP CLI.

CLI?

At first, we planned on simply adding a basic MCP server to Discourse itself, under a new route in the main Rails app. After weighing the pros and cons of that approach, we chose not to start there. The MCP and LLM ecosystem moves fast, and we want to empower every community that wants to build new experiences with this technology. So we chose to ship the first iteration of our MCP server as a standalone command-line interface (CLI) that can connect to any Discourse instance.

This means you don't have to ask your friendly Discourse admin for an update or a settings change before you start experimenting. The CLI builds on the comprehensive Discourse REST API and translates it into MCP. This gives us maximum compatibility and makes new features easy to add. It also inherits existing safeguards such as API key scopes and rate limits.
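To make "translating the REST API into MCP" concrete, here is a minimal sketch of how a search tool call could map onto Discourse's search.json endpoint. The tool name discourse_search, the ToolCall shape and the buildSearchRequest helper are hypothetical illustrations, not the CLI's actual internals. The Api-Key and Api-Username headers are the ones Discourse's REST API uses for authentication, which is how a key's scopes and rate limits carry over. The sketch also assumes the forum is served at the domain root.

```typescript
// Hypothetical, simplified shape of an MCP tool call (illustration only).
interface ToolCall {
  name: string;
  arguments: Record<string, unknown>;
}

// A plain description of the HTTP request that would be sent to Discourse.
interface RestRequest {
  method: "GET";
  url: string;
  headers: Record<string, string>;
}

// Translate a hypothetical "discourse_search" tool call into a Discourse REST request.
function buildSearchRequest(
  site: string,
  call: ToolCall,
  apiKey?: string,
  apiUsername?: string,
): RestRequest {
  if (call.name !== "discourse_search") {
    throw new Error(`Unsupported tool: ${call.name}`);
  }
  const query = call.arguments["query"];
  if (typeof query !== "string" || query.trim() === "") {
    throw new Error("discourse_search requires a non-empty 'query' string");
  }
  // An absolute path replaces any path on `site`, so this assumes a root install.
  const url = new URL("/search.json", site);
  url.searchParams.set("q", query);

  const headers: Record<string, string> = { Accept: "application/json" };
  // Without a key, the request only sees what an anonymous visitor can see.
  if (apiKey) {
    headers["Api-Key"] = apiKey;
    if (apiUsername) headers["Api-Username"] = apiUsername;
  }
  return { method: "GET", url: url.toString(), headers };
}

const req = buildSearchRequest("https://meta.discourse.org", {
  name: "discourse_search",
  arguments: { query: "mcp" },
});
console.log(req.url); // https://meta.discourse.org/search.json?q=mcp
```

Because every call goes through the public REST API, anything the API key is not allowed to do, the tool cannot do either.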

Getting Started

The Discourse MCP CLI is available now and works with any Discourse instance.

To get started, you'll need:

- Node.js installed on your machine

- Your preferred AI assistant that supports MCP

Install the CLI via npm:

```shell
npm install -g @discourse/mcp@latest
```

That's it! Once you add the server to your AI assistant's configuration, it can interact with your Discourse community: search topics, read posts, and even help moderate discussions, depending on your permissions.

Using with Claude Desktop

For Claude Desktop, edit your claude_desktop_config.json file. You may need to add an env entry that sets PATH to include your Node.js install, so that Claude Desktop can find npx.

```json
{
  "mcpServers": {
    "discourse": {
      "command": "npx",
      "args": ["-y", "@discourse/mcp@latest"]
    }
  }
}
```
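If your claude_desktop_config.json already lists other MCP servers, the discourse entry needs to be merged in rather than overwriting the file. Below is a minimal sketch of that merge, using a hypothetical addDiscourseServer helper written for this post. It can also add the optional env entry with a PATH. The PATH value in the example is a placeholder for wherever your Node.js install lives. Reading and writing the file itself is left out.

```typescript
// One MCP server entry, as used under "mcpServers" in the config file.
interface McpServerEntry {
  command: string;
  args: string[];
  env?: Record<string, string>;
}

interface McpConfig {
  mcpServers?: Record<string, McpServerEntry>;
  [key: string]: unknown; // any other settings are passed through untouched
}

// Return a new config with a "discourse" server added (or replaced),
// leaving the input object and all other servers and settings unchanged.
function addDiscourseServer(config: McpConfig, nodePath?: string): McpConfig {
  const entry: McpServerEntry = {
    command: "npx",
    args: ["-y", "@discourse/mcp@latest"],
  };
  if (nodePath) {
    // Used as the server's PATH so npx can be found; include the usual system directories too.
    entry.env = { PATH: nodePath };
  }
  return {
    ...config,
    mcpServers: { ...(config.mcpServers ?? {}), discourse: entry },
  };
}

const existing: McpConfig = {
  mcpServers: { other: { command: "other-server", args: [] } },
};
// Placeholder PATH: adjust to the directory that contains your node and npx binaries.
const updated = addDiscourseServer(existing, "/usr/local/bin:/usr/bin:/bin");
console.log(JSON.stringify(updated, null, 2));
```

The helper returns a new object instead of mutating the input. That makes it easy to compare before and after before writing the file back. The Gemini CLI's settings.json uses the same mcpServers shape, so the same approach applies there.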

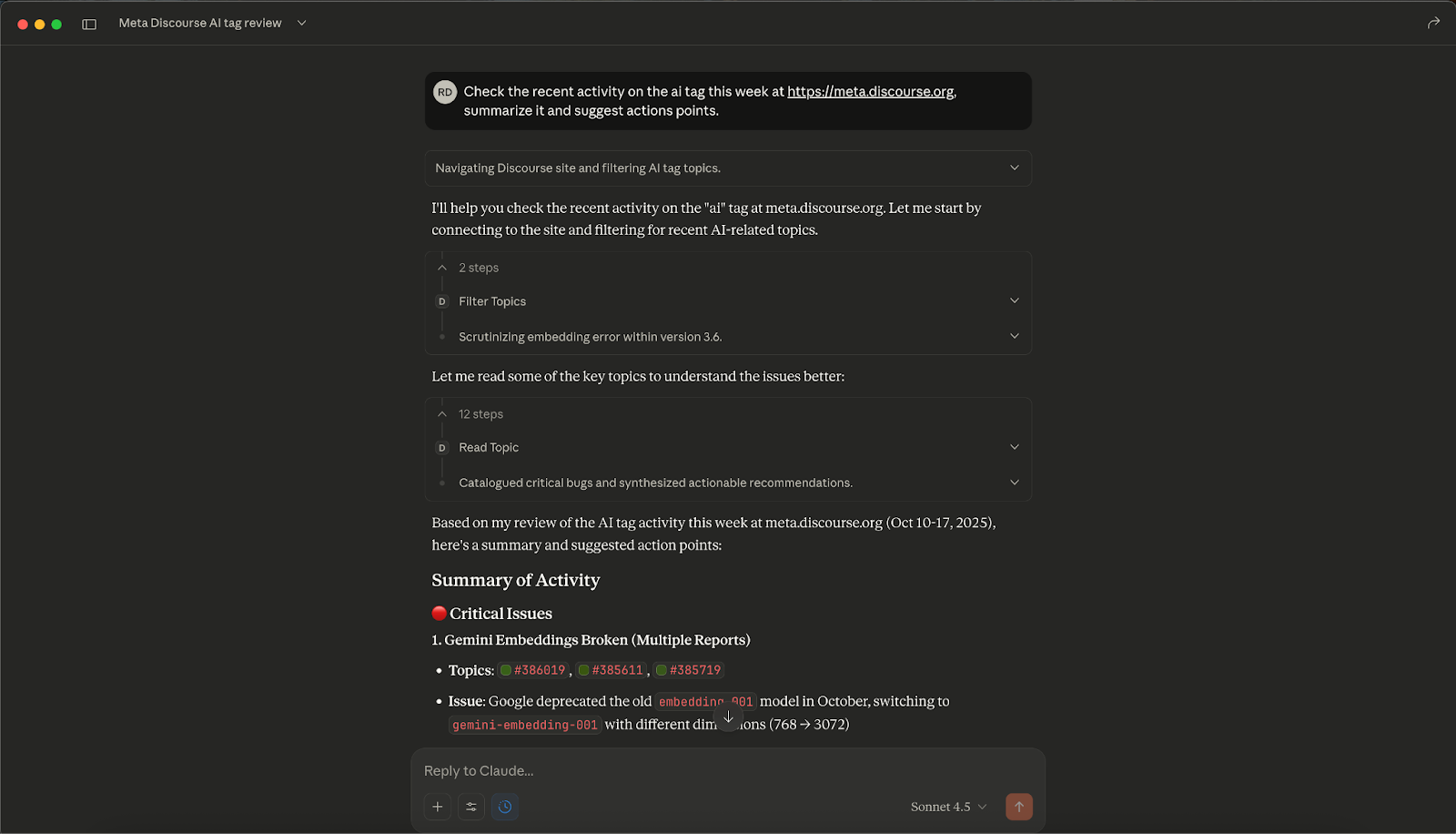

Claude excels at analyzing long threads, helping with moderation decisions, and drafting thoughtful responses that match your community's tone. It's particularly useful for community managers who need to stay on top of multiple active discussions.

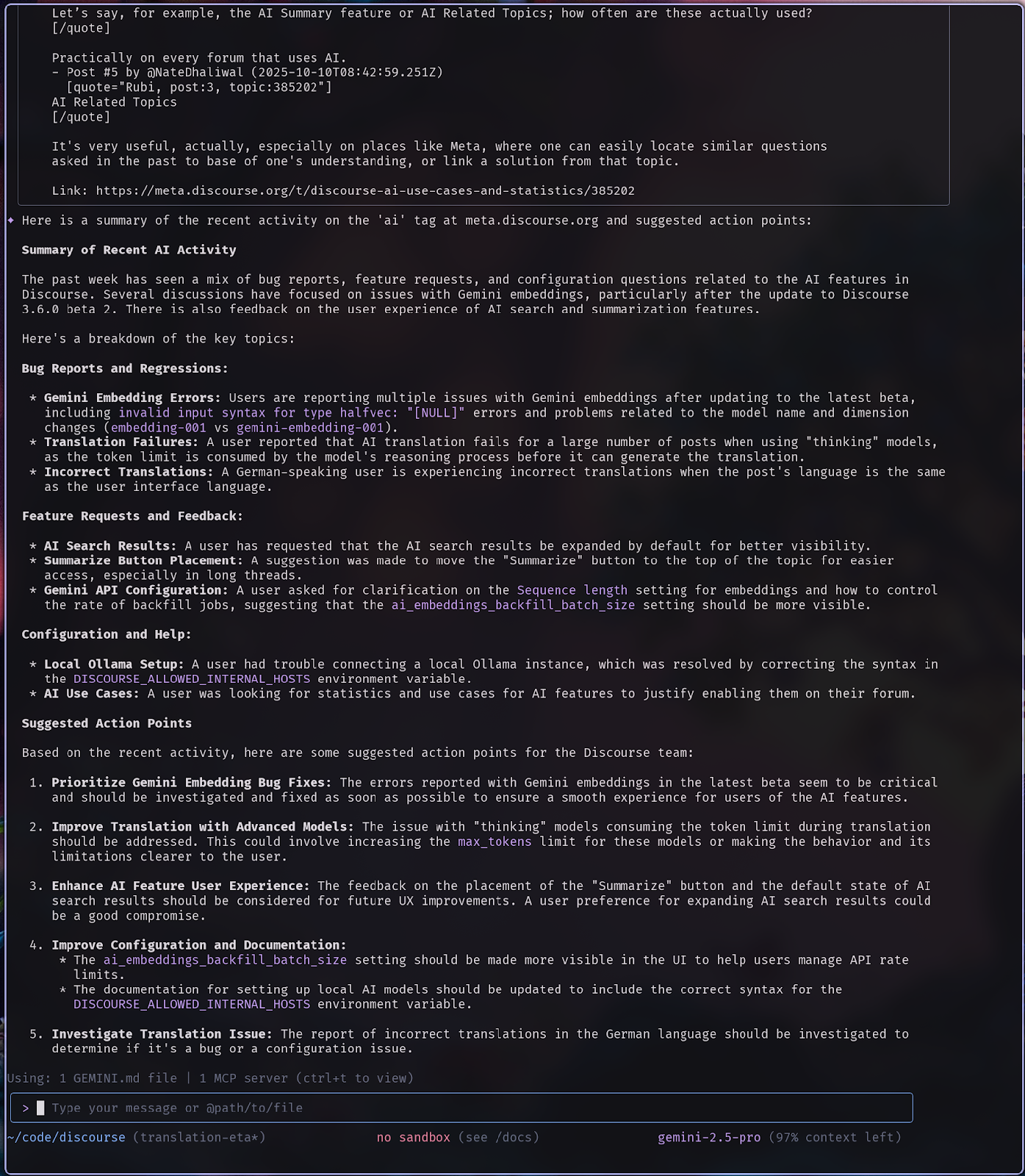

Using with Gemini CLI

The Gemini CLI supports MCP through a similar configuration pattern. Add the following mcpServers entry to ~/.gemini/settings.json, merging it into the existing top-level object if the file already contains other settings:

```json
{
  "mcpServers": {
    "discourse": {
      "command": "npx",
      "args": ["-y", "@discourse/mcp@latest"]
    }
  }
}
```

Gemini's multimodal capabilities make it especially useful for forums with lots of image content or technical documentation.

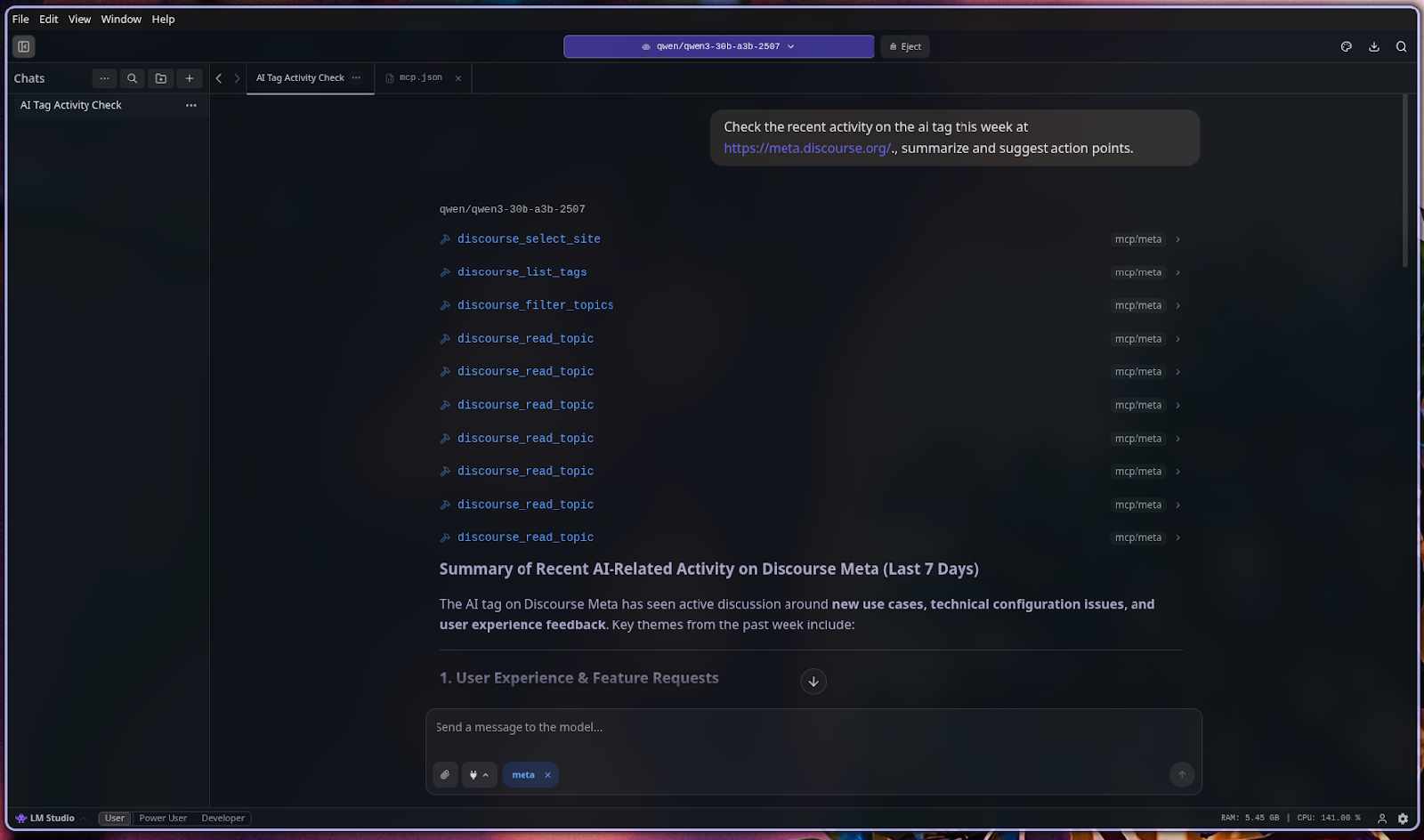

Using with LM Studio

LM Studio users can integrate Discourse MCP by adding it to their MCP server configuration. This lets you use locally run models while still accessing your Discourse data securely. In LM Studio's settings, navigate to the MCP section and add:

```json
{
  "name": "Discourse",
  "command": "npx",
  "args": ["-y", "@discourse/mcp@latest"],
  "environment": {}
}
```

This is perfect for communities that want to experiment with AI features while maintaining full control over their data and model choices.

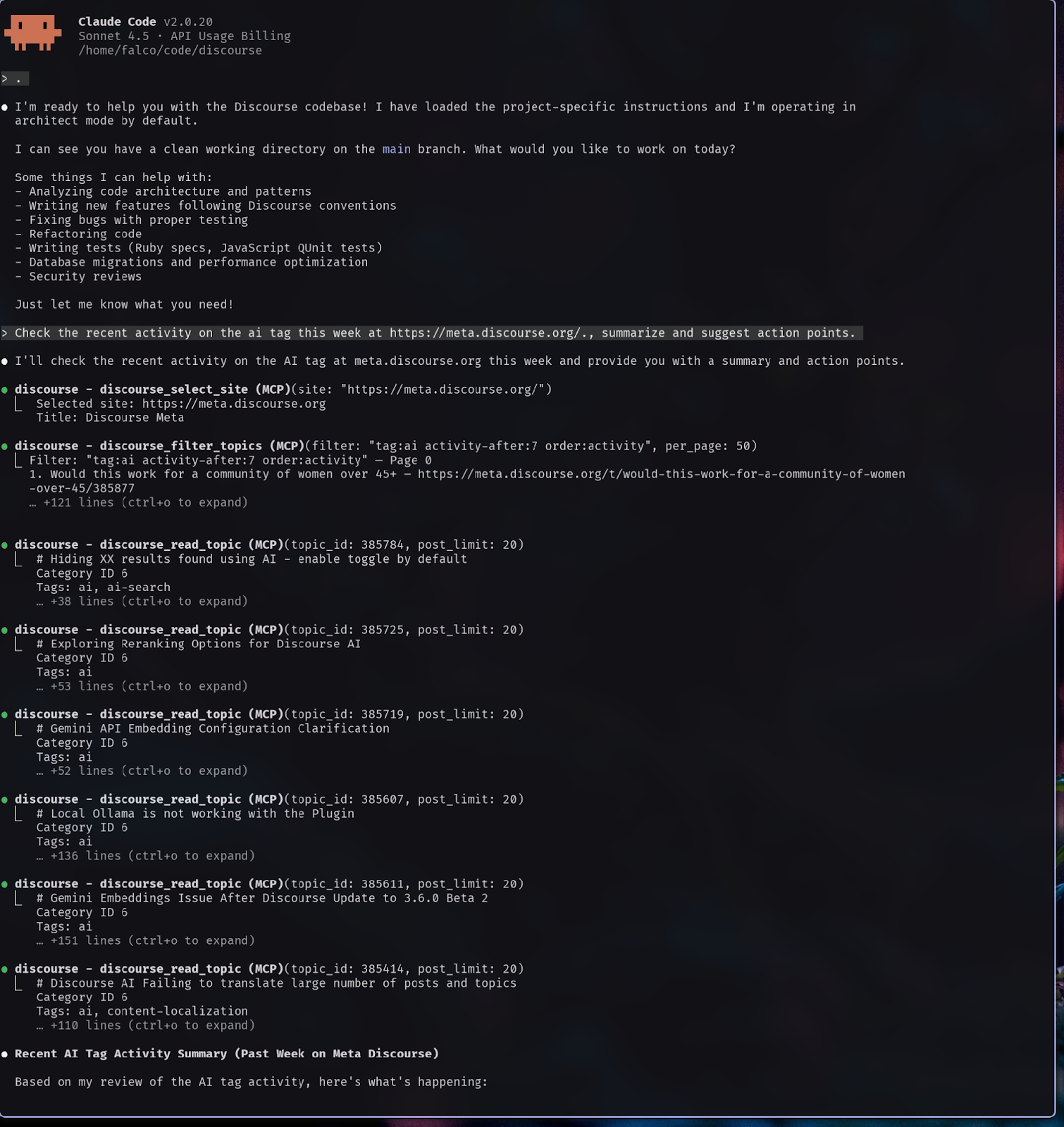

Using with Claude Code

For developers using Claude Code, Discourse MCP fits right into your terminal workflow. Add the server to your workspace configuration:

```shell
discourse-mcp configure --workspace
```

Now you can ask Claude Code to help you build plugins, analyze user behavior patterns, or even generate code to automate community management tasks, all while having direct access to your forum's data.

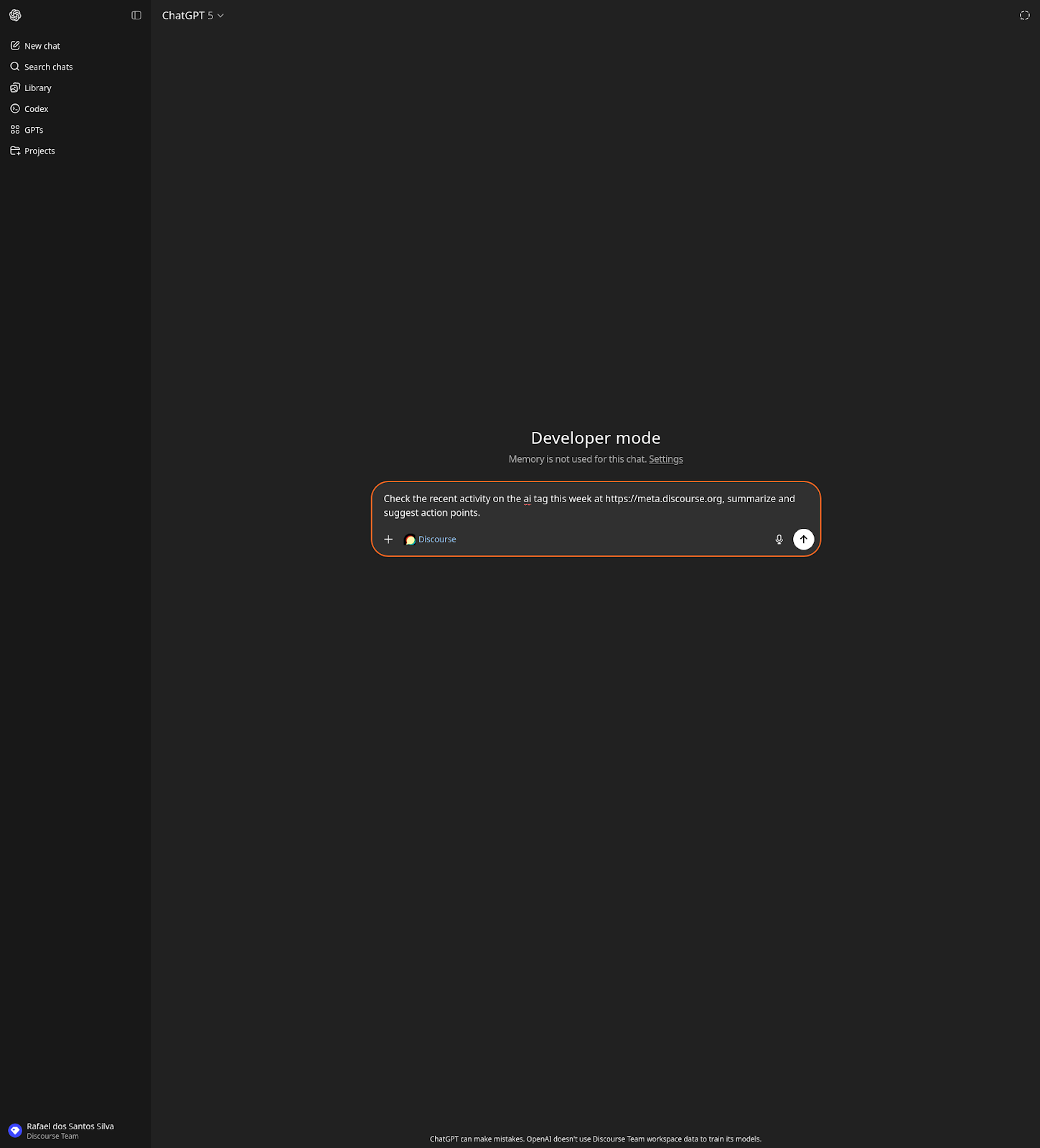

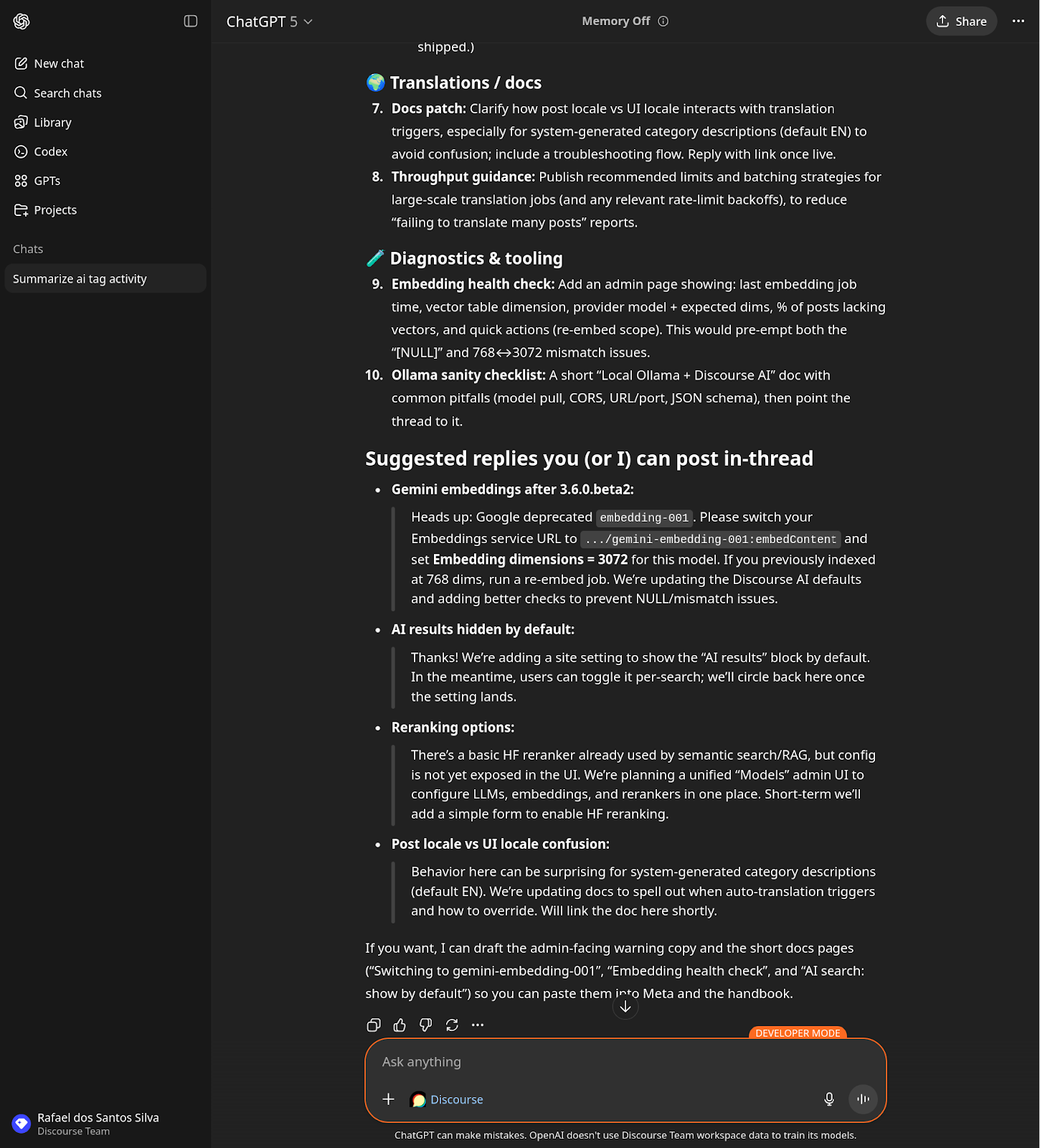

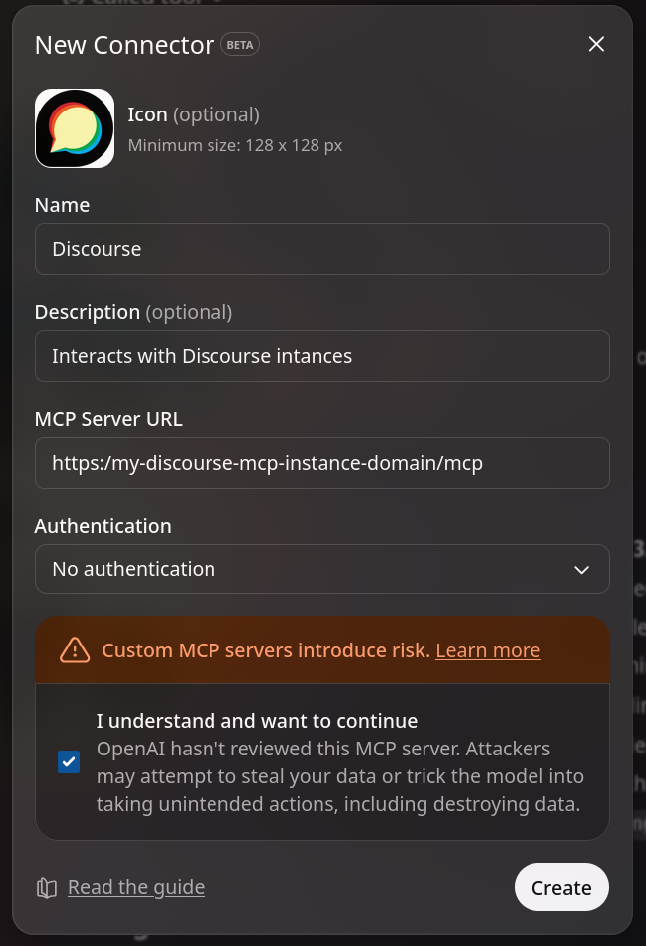

Using with ChatGPT

Using ChatGPT is a bit more involved than the other options. First, enable Developer Mode. Then run discourse-mcp with --transport http and use a tunneling tool to expose it to the internet. Next, create a new Connector that points at the tunnel's public URL.

Finally, enable the connector in a new chat from the plus (+) menu. Hopefully this becomes easier once MCP support comes to ChatGPT Desktop.

What's Next?

This is just the beginning. We're actively working on expanding the capabilities of the Discourse MCP CLI, including:

- Support for writing and publishing posts (with appropriate permissions)

- Advanced moderation tools powered by AI

- Community insights and analytics queries

- Integration with Discourse's plugin system

- Support for webhooks and real-time notifications

We built this as open source because we believe the best features come from community collaboration. Whether you're a community manager looking to streamline moderation, a developer building AI-powered forum experiences, or just someone curious about what's possible when you combine rich community data with powerful AI models, we'd love to hear from you.

Do you have any ideas on how to use Discourse MCP? Are you already using it? Want to request a new feature? Let us know in the comments!