Standing on the Shoulders of a Giant Elephant: Upgrading Discourse to PostgreSQL 13

When the Discourse project started, way back in 2013, the team had to pick and choose between a handful tools into what would become our "stack", the foundational software on which Discourse would be built upon. Some choices proved sub-optimal early, but we were able to quickly migrate over, like for example our migration from CoffeeScript to Javascript.

On the other hand, most of our choices proved to be great, with picking PostgreSQL for the database being the finest. To illustrate how happy we are with it, let's talk about our favorite feature of PostgreSQL latest version: B-Tree deduplication.

A little back history into our hosting service

While Discourse is, of course, 100% open source software first and foremost we are a hosting company. And since we started our commercial hosting services back in 2014, we grew our hosting into serving over 400 millions page views and storing over 4 million new posts each month.

All this data is stored into PostgreSQL instances, so as you can imagine we were very interested when the PostgreSQL 13 release notes contained news about "significant improvements to its indexing and lookup system that benefit large databases, including space savings and performance gains for indexes". It even made us consider breaking from our tradition of skipping the PostgreSQL odd versions and only upgrade every two years. And in order make an informed decision we had to benchmark.

Activate the Shrink Ray

In order to evaluate if the new B-Tree deduplication feature would benefit Discourse in any way, we decided to check if it would have effect in what is the largest table in most Discourse instances in our hosting, the posts_timings table. This tables stores read time of each user in each post and is defined as:

discourse=# \d post_timings

Table "public.post_timings"

Column | Type | Collation | Nullable | Default

-------------+---------+-----------+----------+---------

topic_id | integer | | not null |

post_number | integer | | not null |

user_id | integer | | not null |

msecs | integer | | not null |

Indexes:

"index_post_timings_on_user_id" btree (user_id)

"post_timings_summary" btree (topic_id, post_number)

"post_timings_unique" UNIQUE, btree (topic_id, post_number, user_id)

We are also investigating if we can drop the post_timings_summary index, as it's a subset of the left-most columns in the post_timings_unique one, which means it can potentially be re-used.

Update (August 2021): We removed the post_timings_summary index.

In a particular instance we host, this table recently just went over a billion rows, so we used this number of rows for our test. Also, since in a live system this table receives a constant influx of updates, due to the MVCC we can end up with quite a bit of "bloat" that can skew our analysis. So in order to compare in a clean environment we used brand new installs of the last release of both 12 and 13 pg versions. After loading each version, the numbers are as follows:

Total table Size

PostgreSQL 12: 114 GB

PostgreSQL 13: 85 GB

A 25% reduction in the relation size? That's awesome! 🥳

Digging into specifics we have:

PostgreSQL 12

Table: 42 GB

Index: 72 GB

PostgreSQL 13

Table: 42 GB

Index: 43 GB

As foretold in the release notes, the optimization only applies to the index, and we can reproduce it here. The table size is still the same, but the index size is almost half.

If we enhance it further:

PostgreSQL 12

relation | size

--------------------------------------+------------

public.post_timings | 42 GB

public.post_timings_unique | 30 GB

public.index_post_timings_on_user_id | 21 GB

public.post_timings_summary | 21 GB

PostgreSQL 13

relation | size

--------------------------------------+------------

public.post_timings | 42 GB

public.post_timings_unique | 30 GB

public.post_timings_summary | 6939 MB

public.index_post_timings_on_user_id | 6766 MB

Again, as expected, the UNIQUE index that by definition has 0 duplication saw no change in it's size, but the indexes with repeating values got optimized into just a third of their original size.

Not only index size changes, but also performance. According to the PostgreSQL documentation on the topic:

This significantly reduces the storage size of indexes where each value (or each distinct combination of column values) appears several times on average. The latency of queries can be reduced significantly. Overall query throughput may increase significantly. The overhead of routine index vacuuming may also be reduced significantly.

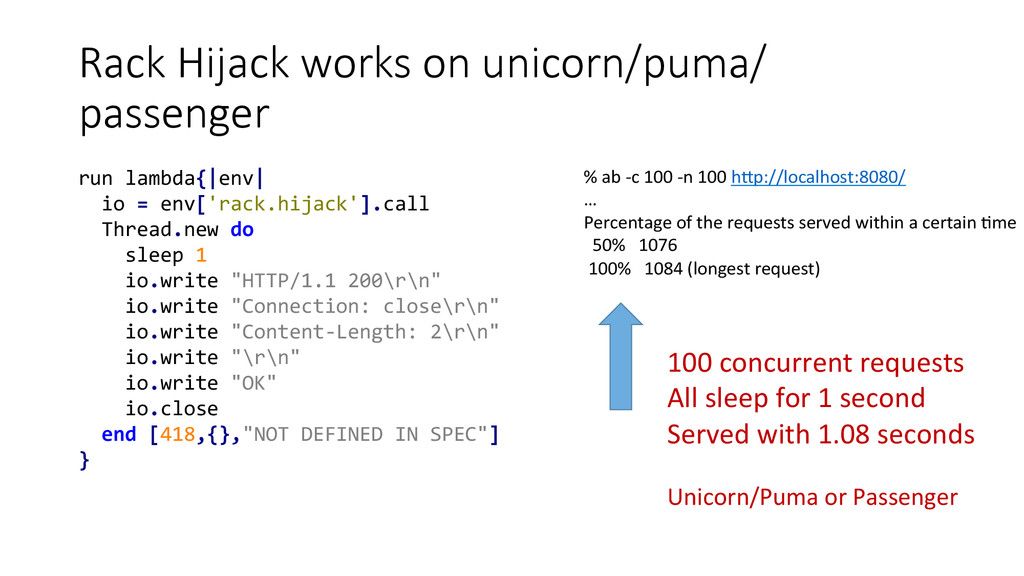

They also add a caveat that for write-heavy workloads with no duplication will incur a small fixed performance penalty. It's not our case here, but if it was this would be alleviated by the fact that this is written in a completely async code path in our application: it's a background request in our client and a non-blocking route in our Rails app that leverages Rack Hijack.

So the prophecy was true: PostgreSQL 13 brings significant improvement to Discourse!

That's a big deal, because here we saw the effect in one table in a single database, where our database schema has dozens of tables. And we host thousands of Discourse instances, with multiple PostgreSQL instances each for High Availability, so the gains are multiplied many times over.

Discourse ❤️ PostgreSQL

As we said in Discourse Gives Back 2017, Discourse has always been a 100% open source project that builds upon the decades of hard work of many other open source projects to survive. As we grow we're happy to be able to also contribute directly to funding the projects we rely on the most. That is why last year we made another monetary donation to the PostgreSQL foundation and we aim to do the same every year.