Dealing with Toxicity in Online Communities

Online communities are places where people should be able to come together to share ideas and learn from each other in an environment that is safe and enjoyable to participate in. In healthy communities, members follow established guidelines and norms to create a culture of collaboration and mutual support. Occasionally, though, cracks can start to appear, and if they are not addressed quickly, a culture of toxicity can creep in. If that culture is allowed to gain momentum, the community becomes a hostile environment that marginalises people.

What does toxicity look like?

Toxicity in communities most commonly manifests in one of the following ways:

- Consistent negativity towards a brand or mission

- Vocal members dominating discussion to the detriment of others

- Derogatory treatment of new members

- Bullying of individuals or demographics

Why does it happen?

The most common cause is the absence of clearly documented guidelines and consistent moderation practices. Culture is very hard to change, so if expectations aren’t communicated properly from the outset and the community begins to spiral into toxicity, it can be difficult to claw your way back. Similarly, if published guidelines aren’t firmly and consistently enforced, unhealthy patterns may start to emerge.

Most frequently, this occurs when moderators turn a blind eye to bad behaviour from prolific posters for fear of alienating them. Community Managers with engagement targets to reach will sometimes let vocal power-users behave in unhealthy ways because removing them from the community could hurt engagement metrics, especially if they are long-standing members who post frequently and answer lots of questions. Sadly, that is a short-sighted approach that ends up compromising the community as a whole.

Toxic behaviour can be slow to take hold, but once it does, it quickly gathers momentum and can skew the culture so that members behave in negative and territorial ways, undermining the social structure of the community. It often starts with one or two people using aggressive language or subtle bullying tactics under the guise of being helpful. A moderator may reach out to them with an informal warning, which sometimes softens the behaviour temporarily, but it generally ramps up again and then starts to serve as an example for other members.

Toxicity is also contagious and, if tolerated, can quickly become the norm, derailing healthy discourse and alienating new members. To put this in another context: if you were confronted by a rude diner every time you ate at your local restaurant, would you stick around or find somewhere else to eat?

Toxicity can also ramp up quickly, usually in response to a specific event. Think along the lines of software releases that aren’t robust, products that are heavily hyped but under-deliver, or unexpected microtransactions in games. When reasonable feedback from the community is ignored, people quickly become frustrated and feel disenfranchised. The root cause is different and the escalation is much faster, but without swift intervention the end result is the same.

Take, for example, the Star Wars Battlefront II loot box debacle of 2017, which produced the most downvoted comment in Reddit history. The microtransactions were pulled even before the game’s release, but the toxicity was already so widespread that the game never really recovered, and new content development was halted after only about two years of support.

Regardless of the cause and momentum of the negativity, it is vital to put a stop to it quickly and firmly.

How can it be avoided?

Online communities are not a democracy, however much long-tenured members might like them to be. It is the responsibility of a Community Manager to set the terms of engagement and to enforce them for all members.

Start by setting the ground rules:

- Have a clear set of documented guidelines for participating in the community.

- Ensure those guidelines are shared with all new members when they join. Include a link in your onboarding email or message and have an obvious link on your homepage.

- Reinforce the rules with new members regularly so they become ingrained in your culture.

- Don’t be afraid to remind people of the rules and to make it very clear that the community is heavily moderated.

- Remember that kindness and civility can also be contagious, so set positive examples for people to follow, and celebrate kind and positive interactions.

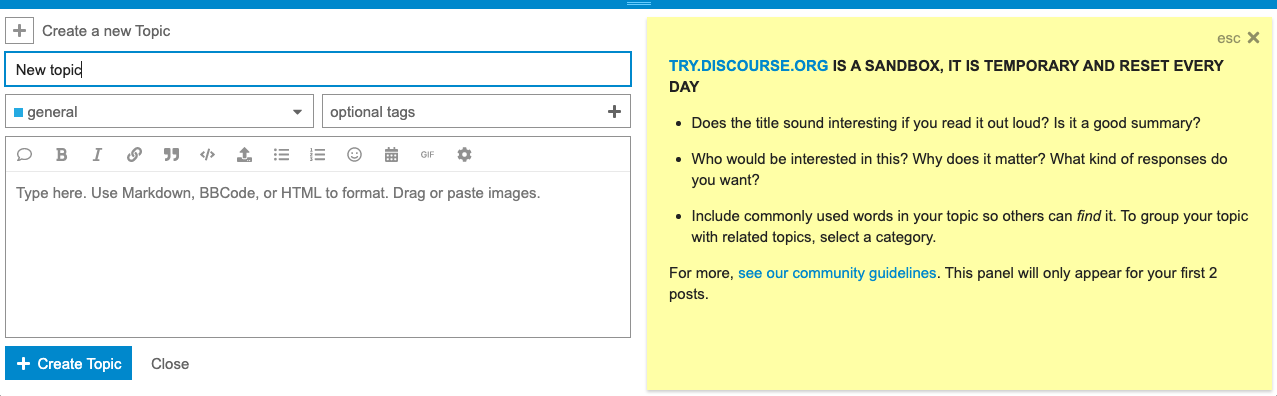

Discourse uses ‘just in time messaging’ to remind new members of the guidelines the first few times they post.

Once you’ve set clear expectations, be prepared to enforce them rigorously. If you notice a pattern of behaviour emerging with an individual that contravenes your guidelines, start with a discussion. Send a personal message explaining that you appreciate their contributions but have noticed some concerning behaviour. Explain that it’s important that their public conduct matches the quality of their content. Be polite and brief but explicit, and, most importantly, focus on the behaviour, not the individual. Sometimes that is all it takes to get someone back on track.

Sadly, though, that’s not always the case. Habitual bullies and trolls tend to push back against authority, often levelling accusations of censorship. It’s important to point out that moderation and censorship are very different things. Moderation is designed to ensure that discourse remains civilised, that discussions stay relevant and on topic, and that behaviour falls within community guidelines. Censorship is the suppression or silencing of disagreement itself, rather than of behaviour that breaks the rules.

When you come up against this or a similar situation, the ‘three-strike rule’ is a good one to fall back on. If the initial discussion didn’t work, send an official warning. No change? Enforce a “cool-off period” with a one-week ban. If that still doesn’t work, ban them permanently. It doesn’t matter how valuable their contributions are: the job of a Community Manager is to protect the safety and enjoyment of the entire community, not just the vocal few.
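The escalation path described here behaves like a small state machine: each repeat offence moves a member one step along a fixed ladder, and the final step is terminal. The sketch below models that ladder in Python as a hypothetical record-keeping helper. It is not a Discourse feature, and the names `Action`, `MemberRecord` and `next_action` are illustrative assumptions; in practice a moderator would carry out each step with Discourse’s own tools (a personal message, an official warning, a suspension).

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timedelta
from enum import Enum


class Action(Enum):
    PRIVATE_DISCUSSION = "private discussion"
    OFFICIAL_WARNING = "official warning"
    ONE_WEEK_BAN = "one-week ban"
    PERMANENT_BAN = "permanent ban"


# Hypothetical ladder, in the order described above; the last step is terminal.
LADDER = [
    Action.PRIVATE_DISCUSSION,
    Action.OFFICIAL_WARNING,
    Action.ONE_WEEK_BAN,
    Action.PERMANENT_BAN,
]


@dataclass
class MemberRecord:
    """Hypothetical per-member enforcement history kept by moderators."""
    username: str
    steps_taken: int = 0
    history: list[tuple[datetime, Action]] = field(default_factory=list)


def next_action(record: MemberRecord, now: datetime) -> tuple[Action, datetime | None]:
    """Record and return the next enforcement step for a member.

    For the one-week ban, also return when the cool-off period ends;
    for every other step, the second value is None.
    """
    # Stay on the final step once the ladder is exhausted.
    step = min(record.steps_taken, len(LADDER) - 1)
    action = LADDER[step]
    record.steps_taken += 1
    record.history.append((now, action))
    ends = now + timedelta(weeks=1) if action is Action.ONE_WEEK_BAN else None
    return action, ends
```

Keeping the ladder in a single list makes the policy explicit, so every member goes through the same sequence of steps regardless of how prolific or valuable they are.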

What if you’re too late?

If you find yourself in a situation where things are escalating quickly due to a specific incident, act fast. Contain all related discussions in one place and respond promptly, acknowledging people’s frustration. Do not ignore or argue with criticism; instead, make it clear that constructive feedback is welcome but rudeness is not. Guide people towards creating the kind of culture they want to be a part of. Lead by example and others will follow.

Use the tools at your disposal

Discourse ships with a number of robust moderation tools which will support you in creating a healthy community culture.

- Flags let the community shape its own culture by calling out bad behaviour.

- Topic timers can be used to temporarily close topics that are becoming heated.

- Slow mode is a good way to stop frequent or vocal posters from dominating a topic.

- Staff notices and staff posts are a good way to add public behaviour reminders mid-topic.

- User Notes let staff share private notes about a user with other staff.

- Watched words assist with moderating bad language or common trigger words.

- Official warnings can be issued when things start going downhill.

- Silencing a user stops them from posting or messaging other users.

- Suspensions block members from logging in for specific time periods (or permanently).

- Unlisting topics can be used to hide discussions while untangling difficult situations.

- Public topics can be converted to PMs if you need to take a discussion private.
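Watched words work by matching post text against a configured list of terms and applying an action when one appears. As a rough illustration only, the sketch below shows whole-word, case-insensitive matching with two example actions. The action names loosely echo some of Discourse’s watched-word options, but the matching logic, the function names `compile_watched` and `check_post`, and the example words are all assumptions, not Discourse’s implementation.

```python
from __future__ import annotations

import re
from enum import Enum


class WordAction(Enum):
    FLAG = "flag"      # hypothetical: send the post to moderators for review
    CENSOR = "censor"  # hypothetical: mask the word in the published post


def compile_watched(words: dict[str, WordAction]) -> list[tuple[re.Pattern, WordAction]]:
    """Build one whole-word, case-insensitive pattern per watched word."""
    return [
        (re.compile(r"\b" + re.escape(word) + r"\b", re.IGNORECASE), action)
        for word, action in words.items()
    ]


def check_post(text: str, rules: list[tuple[re.Pattern, WordAction]]) -> tuple[str, set[WordAction]]:
    """Return the (possibly censored) text and the set of actions triggered."""
    triggered: set[WordAction] = set()
    for pattern, action in rules:
        if pattern.search(text):
            triggered.add(action)
            if action is WordAction.CENSOR:
                # Replace each match with asterisks of the same length.
                text = pattern.sub(lambda m: "*" * len(m.group(0)), text)
    return text, triggered
```

The `\b` word boundaries matter: without them, a watched word would also match inside longer, harmless words, producing false positives that quickly erode members’ trust in the filter.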